Seven steps to writing a screener survey

How to make sure you are always getting the best participants.

Screener surveys are an excellent tool for the beginning of each research study. Before you jump into recruiting, you can send out this survey to assess potential participants’ backgrounds, behaviors, habits, and other essential characteristics.

There are a few reasons we write screener surveys:

- Find and talk to the most relevant participants for your research study

- Make sure you are getting high-value participants

- Get the highest possible return on investment from your research

- Avoid wasting time and money on suboptimal participants

- Avoid wasting other people’s time (e.g., users who might not be the best fit)

- Dodge awkward research sessions where the participant cannot meaningfully answer the questions you are asking

I learned about the importance of screener surveys the hard way: through first-hand awkward experiences with the wrong participants for my study. I was researching the process people go through when they move to a new home or apartment. I wrote my screener survey, which was full of demographic questions and asked only a few questions about whether respondents had moved recently.

We received a good number of responses quickly, and I was happy: they matched the demographic data we wanted from our target participants. Nothing clicked in my head to make me ask, “How are we getting so many participants so quickly?” Not to spoil the plot, but the answer had to do with the screener survey. It was too focused on demographic data and not focused enough on behavior.

So, what happened? I had participants come in, and I asked them my default starting question, “Tell me about the last time you moved.” They gave me an overview, and I set out to dig into the details:

- Me: “Okay, great, now walk me through the step-by-step process you went through.”

- Participant: “Oh, I wasn’t involved in the process. My partner/real estate agent took care of that. You should probably talk to them.”

- Me (internally): Sh*t.

Some participants had also lied on the screener survey. They said they had moved in the past year when, in fact, some had moved a few years earlier. The survey made it obvious what I was looking for, so they knew which answer would qualify them for the incentive.

I had recruited the wrong participants because of my screener survey. Not all the participants were a bad fit, but an overwhelming majority ended up not being very helpful. I had to redo my screener survey and start recruiting again. That is when I learned that screener surveys:

- Are not all about demographics

- Should focus on behavior and habits

- Need to elicit specific information

- Can’t give away which answer is correct

After that experience, I took my screener surveys much more seriously and learned how to write them correctly.

However, they can be tricky to write

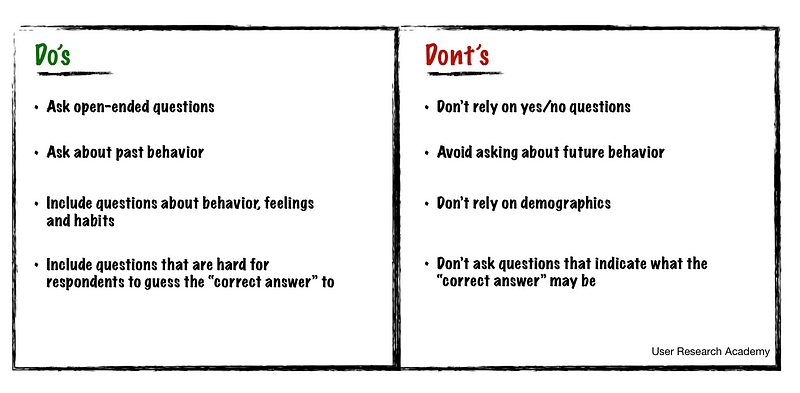

Like most things in user research, how we construct screener surveys deserves serious thought. Certain questions can backfire on us, so you have to strike a balance within a screener survey:

- Get the right information to qualify the best participants

- Make sure you are not asking obvious questions that would lead people to exaggerate their responses to participate

Over the past few years, I have done my best to strike this balance. I always use the following steps before writing and sending out a screener survey.

The seven steps to writing an excellent screener survey

1. List the criteria of your ideal participants. Before you even think about recruiting, list all of the criteria an ideal participant would meet, and think about the information you need from them. For instance, if I had genuinely thought about needing information on the step-by-step moving process, I would have (hopefully) included questions about whether or not respondents took part in it. It is helpful to consider:

- Who are your target personas or proto-personas?

- What behaviors do you want to understand more?

- What habits are you trying to target?

- What are the goals the user is trying to accomplish?

2. Write one question for each criterion you identified

- For each criterion or behavior, write one screener question

For example, if it is essential that someone has wanted to move for the past 60 days, write a question about this behavior

- Make sure to write precise questions that ask for particular behavior patterns or timeframes:

Imprecise criteria: People who have wanted to move for 60 days

Precise criteria: People who have visited 3+ apartments in the past 60 days

3. Focus on how potential participants feel and HAVE behaved

- Focus on their past behavior, as it is the best predictor of future behavior

Future question: Will you look at apartments in the next 60 days?

Past question: Have you looked at 3+ apartments in the past 60 days?

- Ask only for the basic demographic information you need, since every extra question makes the survey longer

4. Order your questions carefully (use logic!)

- Ask questions that will quickly weed people out at the beginning

- Prioritize the essential criteria you need the participant to align with before you ask specific questions

For example: Make sure you ask IF someone has thought about moving before asking them how often they have viewed apartments

- Ask about location first if you need in-person interviews

- Use a funnel approach

For example: Start with broader questions, move into specifics, and then broaden out again with questions like demographic data

5. Avoid leading questions

- Leading questions push people to answer in particular ways and skew your data

- Leading: How great was our coffee?

- Fixed: How would you describe our coffee?

6. Include a mix of open-ended and closed questions to avoid obvious answers

- Using a combination of open and closed questions helps make sure you are getting the best participants. A 60/40 mix is a safe starting point, where 60% of questions are closed and 40% are open-ended.

- Open-ended questions help you see how a participant would answer in their own words, without priming them to respond in a specific way

- Using closed questions in a non-obvious way can help you understand behavior patterns (ex: asking how many times someone viewed apartments in 3 months, and giving a range of answers)

- Adding an open-ended question to your screener survey can give you clues as to how much insight a participant will provide in your actual study

- One-word responses, illogical rambling, or cagey answers can all indicate that a participant may provide a low return on investment

7. Always include an open text field

- Always include an open-text area in each multiple-choice question, because you can never be sure you have listed every option someone might need

- Ex: “other,” “not applicable,” “I don’t know,” or “none of the above”

Let’s go through an example:

We own a plant shop in Brooklyn, New York, where we sell groups of exotic plants. We have been posting our plants on social media, and people from around the United States are contacting us and asking if we have an online shop. We want to consider this as an option but aren’t sure what people would want or expect. We want to conduct user research to understand the following:

- How people would like to purchase plants online

- What people expect from an online plant store

- What they think of other online plant stores

- What they think of a prototype of our online plant store

Our screener survey might include questions like:

- Have you purchased plants online? (Yes/No)

If yes:

- How often have you bought a plant online in the past six months? (Multiple choice)

- How many plants have you purchased online in the past six months? (Multiple choice)

- Where did you purchase from? (Short open text)

- How was your experience with buying plants online? (Long open text)

If no:

- Have you considered buying a plant online? (Yes/No)

- If yes, why have you not purchased one online yet? (Open text)

- If no, why have you never thought about it? (Open text)
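The if-yes/if-no branching above is the kind of skip logic most survey tools let you configure. As a rough sketch only, assuming answers are collected as a simple dictionary of yes/no values (the question IDs below are hypothetical and not tied to any particular survey tool), the routing could look like this in Python:

```python
from typing import Dict, List

# Hypothetical question IDs for the plant shop screener.
PURCHASED_BRANCH = [
    "times_bought_past_6_months",   # multiple choice
    "plants_bought_past_6_months",  # multiple choice
    "where_purchased",              # short open text
    "purchase_experience",          # long open text
]
CONSIDERED_BRANCH = ["why_not_purchased_yet"]     # open text
NOT_CONSIDERED_BRANCH = ["why_never_considered"]  # open text


def next_questions(answers: Dict[str, bool]) -> List[str]:
    """Return the question IDs a respondent should see next,
    mirroring the if-yes / if-no branching of the example screener."""
    # Always start with the broad knock-out question.
    if "purchased_online" not in answers:
        return ["purchased_online"]
    if answers["purchased_online"]:
        return list(PURCHASED_BRANCH)
    # Has not purchased online: ask whether they have considered it.
    if "considered_online" not in answers:
        return ["considered_online"]
    if answers["considered_online"]:
        return list(CONSIDERED_BRANCH)
    return list(NOT_CONSIDERED_BRANCH)
```

For example, a respondent who answers “No” to buying plants online but “Yes” to considering it would be routed to the single open-text question asking why they have not purchased one yet.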

Feel free to add more thoughts on how you would screen!

For more engaging discussion, please sign up for the User Research Academy Slack group! And check out User Research Academy for free resources.
